iOS App Dev: Image Filter and Analysis with Core Image

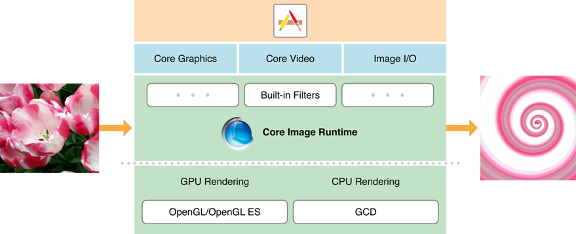

Whenever you plan to create an app or service for image or photo editing, Core Image is the framework to rely on. Let's start with the Core Image concept.

Core Image

Core Image is a framework for image processing and analysis. It works on images from Core Graphics, Core Video, and the Image I/O framework, using either a GPU or a CPU rendering path. As a developer, you can work with a high-level framework like Core Image without knowing the low-level processing details of OpenGL, Metal, the GPU, or even GCD multicore processing.

The following image describes how Core Image interacts with running apps and the OS.

Overall, Core Image provides developers with:

- Access to built-in image processing filters
- Feature detection capability
- Support for automatic image enhancement
- The ability to chain multiple filters together to create custom effects
- Support for creating custom filters that run on a GPU F...
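To make three of these capabilities concrete (built-in filters, filter chaining, and automatic enhancement), here is a minimal sketch in Swift. It assumes the type-safe `CoreImage.CIFilterBuiltins` API (iOS 13+ / macOS 10.15+). The helper names `sepiaVignette`, `autoEnhance`, and `render` are hypothetical, and the filter parameter values are arbitrary choices for illustration, not recommended settings.

```swift
import CoreGraphics
import CoreImage
import CoreImage.CIFilterBuiltins

/// Hypothetical helper: chains two built-in filters (sepia tone, then vignette).
/// Each `outputImage` is only a recipe; no pixels are processed until a
/// CIContext renders the result.
func sepiaVignette(_ input: CIImage, intensity: Float = 0.8) -> CIImage? {
    let sepia = CIFilter.sepiaTone()
    sepia.inputImage = input
    sepia.intensity = intensity

    let vignette = CIFilter.vignette()
    vignette.inputImage = sepia.outputImage // chain: sepia output feeds the vignette
    vignette.intensity = 1.0                // arbitrary example values
    vignette.radius = 2.0
    return vignette.outputImage
}

/// Hypothetical helper: applies the filters Core Image suggests after analyzing
/// the image (automatic enhancement), chaining them one after another.
func autoEnhance(_ input: CIImage) -> CIImage {
    var result = input
    for filter in input.autoAdjustmentFilters() {
        filter.setValue(result, forKey: kCIInputImageKey)
        result = filter.outputImage ?? result
    }
    return result
}

/// Hypothetical helper: renders the lazy filter graph into a CGImage.
/// The context chooses the rendering path (GPU when available, otherwise CPU).
func render(_ image: CIImage, with context: CIContext) -> CGImage? {
    context.createCGImage(image, from: image.extent)
}
```

Creating a `CIContext` is relatively expensive, so the sketch takes one as a parameter: an app would typically create a single context and reuse it for every render. A usage example with a solid-color test image:

```swift
let context = CIContext()
let input = CIImage(color: CIColor(red: 1, green: 0, blue: 0))
    .cropped(to: CGRect(x: 0, y: 0, width: 64, height: 64))
if let styled = sepiaVignette(input) {
    let cgImage = render(styled, with: context)
}
```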
