iOS App Dev: Image Filter and Analysis with Core Image

If you are planning to create an app or service for image or photo editing, Core Image is the framework to rely on. Let's start with the Core Image concept.

Core Image

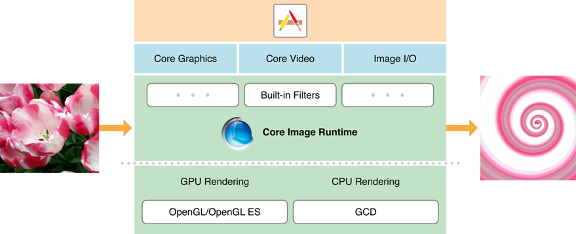

Core Image is a framework that supports image processing and analysis. Core Image works on images from Core Graphics, Core Video, and the Image I/O framework, using either a GPU or a CPU rendering path. As a developer, you can work with a high-level framework like Core Image without knowing the low-level processing details of OpenGL, Metal, or the GPU, or even of GCD-based multicore processing.

The following image describes how Core Image interacts with running apps and the operating system.

Overall, Core Image provides developers with features such as:

- Access to built-in image processing filters

- Feature detection capability

- Support for automatic image enhancement

- The ability to chain multiple filters together to create custom effects

- Support for creating custom filters that run on a GPU

- Feedback-based image processing capabilities
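To make the filter-chaining and automatic-enhancement items concrete, here is a minimal sketch that works directly on a `CIImage`. The helper names `sepiaWithVignette` and `autoEnhanced` are hypothetical and the parameter values are arbitrary; the Core Image calls doing the work are `CIImage.applyingFilter(_:parameters:)` and `CIImage.autoAdjustmentFilters(options:)`.

```swift
import CoreImage

/// Hypothetical helper: chains two built-in filters, sepia tone then vignette.
/// Each applyingFilter call returns a new CIImage that only describes the
/// recipe; no pixels are rendered until a CIContext draws the result.
func sepiaWithVignette(_ input: CIImage) -> CIImage {
    return input
        .applyingFilter("CISepiaTone", parameters: [kCIInputIntensityKey: 0.8])
        .applyingFilter("CIVignette", parameters: [kCIInputIntensityKey: 1.0,
                                                   kCIInputRadiusKey: 2.0])
}

/// Hypothetical helper: applies the enhancement filters that Core Image
/// suggests for this image (tone curve, vibrance, etc.), in the order given.
func autoEnhanced(_ input: CIImage) -> CIImage {
    var result = input
    for filter in input.autoAdjustmentFilters(options: nil) {
        filter.setValue(result, forKey: kCIInputImageKey)
        if let output = filter.outputImage {
            result = output
        }
    }
    return result
}
```

Because each step only builds up a recipe, Core Image can often combine chained filters and render them together when a `CIContext` finally draws the image.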

While using Core Image, you will mostly work with three basic classes from the framework:

- CIImage: A representation of an image to be processed or produced by Core Image filters.

- CIFilter: An image processor that produces an image by manipulating one or more input images or by generating new image data.

- CIContext: An evaluation context for rendering image processing results and performing image analysis.
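The three classes are used in a fixed order: a `CIImage` goes into a `CIFilter`, and a `CIContext` renders the filter's output. The sketch below shows that pipeline in isolation. It uses a solid-color `CIImage` instead of a photo so it runs without any image assets, and the function name `invertedFirstPixel` is hypothetical.

```swift
import CoreImage

/// Hypothetical helper: runs an image through CIImage -> CIFilter -> CIContext
/// and returns the RGBA8 bytes of the pixel at (0, 0).
func invertedFirstPixel(of input: CIImage, context: CIContext = CIContext()) -> [UInt8]? {
    // CIFilter: look up a built-in filter by name and set its input image.
    guard let filter = CIFilter(name: "CIColorInvert") else { return nil }
    filter.setValue(input, forKey: kCIInputImageKey)

    // The output is still a CIImage "recipe"; no pixels exist yet.
    guard let output = filter.outputImage else { return nil }

    // CIContext: render a single pixel into a 4-byte RGBA buffer.
    var pixel = [UInt8](repeating: 0, count: 4)
    context.render(output,
                   toBitmap: &pixel,
                   rowBytes: 4,
                   bounds: CGRect(x: 0, y: 0, width: 1, height: 1),
                   format: .RGBA8,
                   colorSpace: CGColorSpace(name: CGColorSpace.sRGB))
    return pixel
}
```

For example, inverting a pure red image should give a pixel close to `[0, 255, 255, 255]` (cyan, fully opaque).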

Core Image Filter

Let's implement built-in image filtering using the Core Image framework. Assume that you have a running Xcode project and that one of the storyboard scenes contains an image view and a couple of buttons titled with filter names, say Sepia Tone, Comic Effect, and Invert Color. The titles matter, because the code below uses them to choose the filter. You may apply other effects as well; the list of image effects provided by the Core Image framework can be accessed through this link.

A common action for all of these buttons can look like this:

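```swift
@IBAction func filterButtonTapped(_ sender: UIButton) {
    // The button's title ("Sepia Tone", "Comic Effect", ...) selects the filter.
    guard let title = sender.currentTitle else { return }
    applyEffect(title: title)
}
```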

And the implementation of the applyEffect(title:) function would be:

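```swift
func applyEffect(title: String) {
    // UIImageView shows a UIImage, but CIFilter needs a CIImage.
    guard let image = UIImage(named: "image"),
          let workingImage = CIImage(image: image) else { return }

    // The CIContext does the actual rendering (consider reusing one instance).
    let context = CIContext(options: nil)

    // Map the button title to a built-in Core Image filter name.
    let filterName: String
    switch title {
    case "Sepia Tone":   filterName = "CISepiaTone"
    case "Comic Effect": filterName = "CIComicEffect"
    default:             filterName = "CIColorInvert"
    }

    guard let filter = CIFilter(name: filterName) else { return }
    filter.setValue(workingImage, forKey: kCIInputImageKey)

    if filterName == "CISepiaTone" {
        filter.setValue(0.5, forKey: kCIInputIntensityKey) // Intensity range: 0 to 1
    }

    // Render the output to a CGImage, then wrap it in a UIImage for display,
    // keeping the original image's scale and orientation.
    if let output = filter.outputImage,
       let cgImg = context.createCGImage(output, from: output.extent) {
        let changedImage = UIImage(cgImage: cgImg,
                                   scale: image.scale,
                                   orientation: image.imageOrientation)
        imageView.image = changedImage
    }
}
```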

Remember that an image view displays UIImage values, but CIFilter works on CIImage. Therefore, the code converts the image into a CIImage named "workingImage" and sets it as the filter's input image (kCIInputImageKey). For the sepia filter, it also sets the intensity of the effect (kCIInputIntensityKey).
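Since iOS 13 (macOS 10.15), Core Image also offers type-safe wrappers in `CoreImage.CIFilterBuiltins`, so the input image and intensity can be set as properties instead of through string keys. A minimal sketch of the sepia case, assuming that deployment target; the helper name `sepia(_:intensity:)` is hypothetical:

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

/// Hypothetical helper: sepia tone via the typed CIFilterBuiltins API
/// instead of CIFilter(name:) and setValue(_:forKey:).
func sepia(_ input: CIImage, intensity: Float = 0.5) -> CIImage? {
    let filter = CIFilter.sepiaTone()
    filter.inputImage = input      // replaces kCIInputImageKey
    filter.intensity = intensity   // replaces kCIInputIntensityKey (0 to 1)
    return filter.outputImage
}
```

The typed API catches misspelled parameter names at compile time, while the string-based `CIFilter(name:)` used above stays convenient when the filter is chosen at run time, as with the button titles.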

Now it is time to render the image with all effects using a CIContext, here called "context". Using this context, create a CGImage from the filter's output image. If that succeeds, convert the CGImage, here "cgImg", back into a UIImage, here "changedImage", and assign it to the image view.
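One practical detail when rendering: `createCGImage(_:from:)` needs a finite rectangle, but some images, such as the solid colors produced by `CIImage(color:)`, have an infinite extent. Creating a `CIContext` is also relatively expensive, so apps usually create one and reuse it. The sketch below handles both; the class name `Renderer` and its `render(_:croppedTo:)` method are hypothetical.

```swift
import CoreImage

/// Hypothetical wrapper that owns a single, reusable CIContext.
final class Renderer {
    // Creating a CIContext is relatively expensive, so create it once.
    private let context = CIContext()

    /// Renders `image` to a CGImage, cropped to `rect` if given.
    /// Returns nil when the area to render is infinite.
    func render(_ image: CIImage, croppedTo rect: CGRect? = nil) -> CGImage? {
        let target = rect ?? image.extent
        guard !target.isInfinite else { return nil }
        return context.createCGImage(image, from: target)
    }
}
```

In the view controller, a `Renderer` (or just a `CIContext`) stored as a property would replace the context created on every button tap.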

That's it. Run the app in the simulator and tap the desired filter button. The image below shows the Comic effect.

Core Image Analysis

Core Image also provides feature detection, which helps you analyze a particular image. For example, you can check whether an image contains any faces and, if so, where each face is and where its mouth and eyes are. You can even check whether the image contains text, and so on. Apple has documentation on the different CIDetector types, and I urge you to go through it via the link below.
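As a rough sketch of what detection looks like, the code below asks `CIDetector` for faces and for text regions in a `CIImage`. The `FaceSummary` struct and both function names are hypothetical; `CIDetector`, `CIFaceFeature`, and the `CIDetectorType…` constants are the Core Image pieces involved. Note that feature coordinates are in Core Image's coordinate space, whose origin is at the bottom-left.

```swift
import CoreImage

/// Hypothetical summary of one detected face.
struct FaceSummary {
    let bounds: CGRect       // face rectangle in Core Image coordinates
    let hasMouth: Bool
    let mouth: CGPoint?      // nil when no mouth position was found
    let hasLeftEye: Bool
    let hasRightEye: Bool
}

/// Hypothetical helper: runs the face detector and summarizes each face.
func detectFaces(in image: CIImage) -> [FaceSummary] {
    let options = [CIDetectorAccuracy: CIDetectorAccuracyHigh]
    guard let detector = CIDetector(ofType: CIDetectorTypeFace,
                                    context: nil,
                                    options: options) else { return [] }
    return detector.features(in: image)
        .compactMap { $0 as? CIFaceFeature }
        .map { face in
            FaceSummary(bounds: face.bounds,
                        hasMouth: face.hasMouthPosition,
                        mouth: face.hasMouthPosition ? face.mouthPosition : nil,
                        hasLeftEye: face.hasLeftEyePosition,
                        hasRightEye: face.hasRightEyePosition)
        }
}

/// Hypothetical helper: true if the text detector finds any text region.
func containsText(_ image: CIImage) -> Bool {
    guard let detector = CIDetector(ofType: CIDetectorTypeText,
                                    context: nil,
                                    options: nil) else { return false }
    return !detector.features(in: image).isEmpty
}
```

In an iOS app, the input would typically come from `CIImage(image:)`, as in the filter example above.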

Let's implement this concept. Inside a new button action, you can add the code below.
